How RoboHearts AI Works

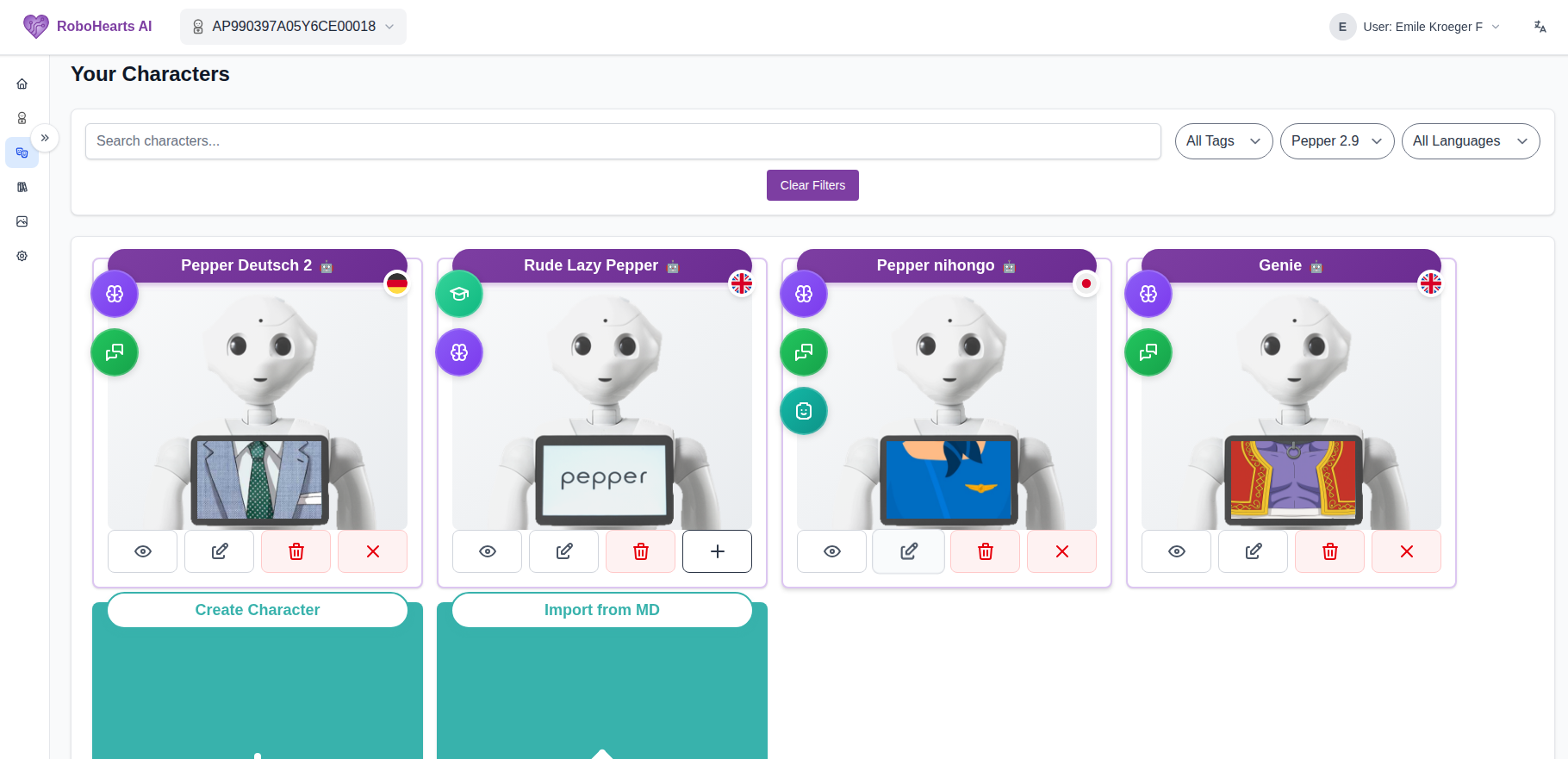

Use the Character Editor to build multilingual and multimodal interactions.

What Is RoboHearts AI?

RoboHearts AI is a multilingual conversational platform for NAO and Pepper robots. Create characters through a web interface, pair your robot, and run conversations powered by AI, scripted dialogue, or both.

The platform handles speech recognition, language models, voice synthesis, and multimodal robot control. You give the robot instructions as you would brief an intern, and it takes care of the conversation and behaviour.

Creating Characters

Write who your robot should be in plain language. No coding required.

The character editor lets you create and manage multiple AI characters. Each character is defined by the instructions you write, which describe its personality, role, or scenario.

These plain-language instructions are enough: the AI keeps the character on track, understands questions, and generates appropriate responses.
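For an AI-driven character, one common way to turn written instructions into model input is to place them in a system message ahead of the conversation. The sketch below assumes RoboHearts AI works this way, which this page does not state. `build_messages` is a hypothetical helper, and the role/content dictionaries follow the message format accepted by OpenAI's Chat Completions API.

```python
from typing import Dict, List, Tuple

# One chat message in the role/content shape used by OpenAI's chat API.
Message = Dict[str, str]


def build_messages(
    instructions: str,
    history: List[Tuple[str, str]],
    user_text: str,
) -> List[Message]:
    """Hypothetical helper: character instructions first, then past turns, then the new utterance."""
    # The character definition goes in a system message so it frames every reply.
    messages: List[Message] = [{"role": "system", "content": instructions}]
    # Replay earlier turns so the model keeps the conversation's context.
    for user_turn, robot_turn in history:
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": robot_turn})
    # The latest recognised speech becomes the final user message.
    messages.append({"role": "user", "content": user_text})
    return messages


guide = "You are a friendly museum guide. Keep answers short and suggest one exhibit to visit."
print(build_messages(guide, [("Hi!", "Welcome to the museum!")], "Where is the café?"))
```

In this sketch only the instruction string changes from one character to another; the rest of the request is assembled the same way on every turn.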

Character Types

QiChat characters – Scripted dialogue using Aldebaran’s QiChat syntax. Works offline and is available on the free tier; ideal for structured, predictable interactions.

LLM-powered characters – AI-driven conversation using OpenAI models. You can layer QiChat rules on top: if a rule matches, the robot gives the scripted response; otherwise the LLM generates the reply.
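The rule-first, LLM-fallback order can be sketched as follows. This is a simplification under stated assumptions. Real QiChat rules use a pattern language (alternatives, wildcards, concepts), whereas here each rule is an exact phrase after lowercasing and stripping punctuation. `llm` is a hypothetical stand-in for the call to an OpenAI model.

```python
import re
from typing import Callable, Dict


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse spaces so 'Opening hours?' matches 'opening hours'."""
    return " ".join(re.sub(r"[^\w\s]", "", text).lower().split())


def respond(utterance: str, rules: Dict[str, str], llm: Callable[[str], str]) -> str:
    """Answer from a scripted rule when one matches; otherwise defer to the LLM."""
    scripted = rules.get(normalize(utterance))
    if scripted is not None:
        return scripted  # a rule matched: predictable, scripted reply
    return llm(utterance)  # nothing matched: the LLM generates the reply


def fake_llm(text: str) -> str:
    """Hypothetical stub standing in for a real model call."""
    return f"(LLM reply to: {text})"


# Hypothetical rule set keyed by normalized phrases.
rules = {"what are your opening hours": "We are open from 9 to 5."}

print(respond("What are your opening hours?", rules, fake_llm))  # scripted answer
print(respond("Tell me a joke", rules, fake_llm))  # falls through to the LLM
```

Checking rules first keeps answers that must be exact, such as opening hours, under your control, while the LLM covers whatever the script does not anticipate.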

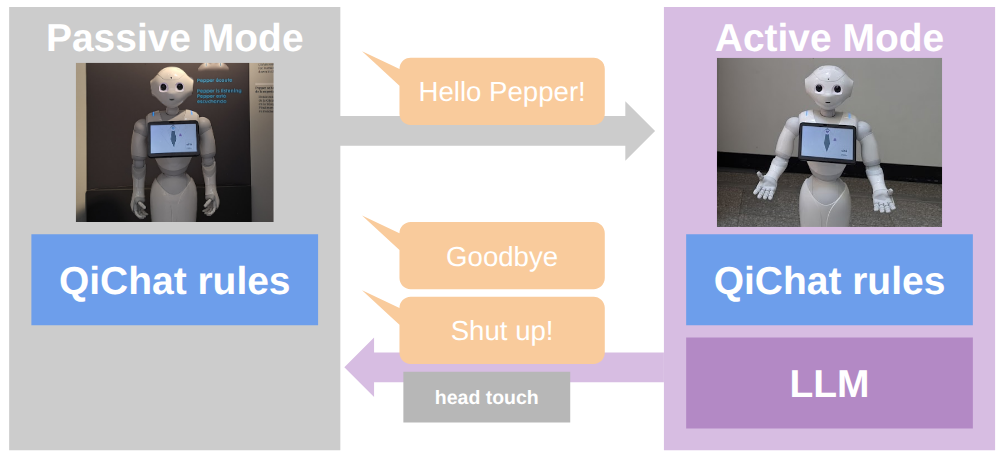

Pepper Interaction Modes

Pepper can be in passive or active mode. This lets it stay present without interrupting, yet respond immediately when needed.

Passive Mode

Pepper listens but responds only to wake phrases and QiChat rules. Say “Hello Pepper!” to activate it; phrases that match any QiChat rule you’ve defined are also answered.

Active Mode

Pepper responds to everything you say:

- QiChat rules are checked first; if one matches, its response is used.

- If nothing matches, the LLM generates a reply (for LLM-powered characters).

- Say “Goodbye” or touch the head sensor to return to passive mode if Pepper is getting too chatty.
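The passive/active behaviour above amounts to a small state machine. The sketch assumes exact-phrase matching after normalization, models the LLM as an optional callable (absent for a QiChat-only character), and uses a hypothetical greeting and farewell. `PepperSession`, `on_speech`, and `on_head_touch` are illustrative names, not part of any Pepper SDK.

```python
import re
from typing import Callable, Dict, Optional

WAKE_PHRASES = {"hello pepper"}  # the wake phrase named in the text
EXIT_PHRASES = {"goodbye"}


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse spaces."""
    return " ".join(re.sub(r"[^\w\s]", "", text).lower().split())


class PepperSession:
    """Hypothetical model of Pepper's passive and active interaction modes."""

    def __init__(self, rules: Dict[str, str], llm: Optional[Callable[[str], str]] = None):
        self.mode = "passive"
        self.rules = rules  # normalized phrase -> scripted reply
        self.llm = llm  # None models a QiChat-only character

    def on_head_touch(self) -> None:
        # Touching the head sensor returns Pepper to passive mode.
        self.mode = "passive"

    def on_speech(self, text: str) -> Optional[str]:
        key = normalize(text)
        if self.mode == "passive":
            if key in WAKE_PHRASES:
                self.mode = "active"
                return "Hi! How can I help?"  # hypothetical greeting
            return self.rules.get(key)  # rules still fire; anything else is ignored (None)
        if key in EXIT_PHRASES:
            self.mode = "passive"
            return "Goodbye!"  # hypothetical farewell
        if key in self.rules:
            return self.rules[key]  # rules are checked first in active mode too
        return self.llm(text) if self.llm else None  # LLM fallback, if the character has one
```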

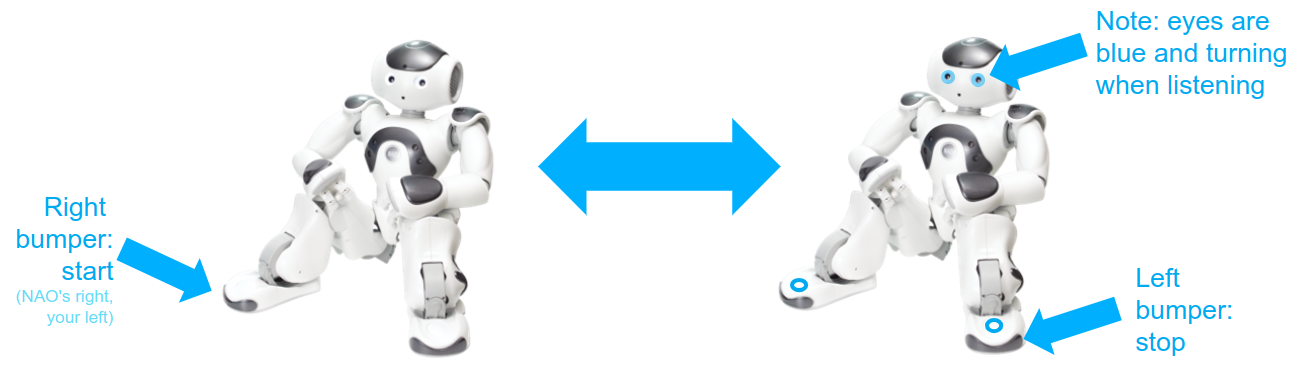

NAO Interaction Modes

Once a character is loaded, use the bumper sensors on NAO’s feet to start and stop dialog mode.

- Right bumper (NAO’s right, your left when facing it): Start dialog mode. NAO’s eyes turn blue and it begins listening.

- Left bumper (NAO’s left, your right when facing it): Stop dialog mode. NAO exits the conversation.

- While in dialog mode, NAO listens, processes speech, and responds. QiChat rules are active the whole time.
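The bumper controls can be modeled as a simple toggle. The mirror-image labelling (NAO’s right is your left when you face it) is the easiest part to get wrong, so this sketch converts from the viewer’s side first. The class and function names and the `"idle"` eye-colour placeholder are hypothetical; the text only says the eyes turn blue while listening.

```python
class NaoDialogControl:
    """Hypothetical model of NAO's bumper-driven dialog mode."""

    def __init__(self) -> None:
        self.in_dialog = False
        self.eye_color = "idle"  # placeholder: the text does not name the non-dialog colour

    def on_bumper(self, nao_side: str) -> None:
        """Handle a bumper press; `nao_side` is from NAO's own point of view."""
        if nao_side == "right":
            self.in_dialog = True
            self.eye_color = "blue"  # listening
        elif nao_side == "left":
            self.in_dialog = False
            self.eye_color = "idle"
        else:
            raise ValueError(f"unknown bumper: {nao_side!r}")


def nao_side_from_viewer(viewer_side: str) -> str:
    """Facing the robot, your left is NAO's right and vice versa."""
    return {"left": "right", "right": "left"}[viewer_side]


nao = NaoDialogControl()
nao.on_bumper(nao_side_from_viewer("left"))  # you press the bumper on your left
print(nao.in_dialog, nao.eye_color)  # True blue
```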

Multilingual Support

Seventeen languages are supported. Characters can switch languages mid-conversation using emoji-based commands (like a flag emoji). Both QiChat and LLM modes work in every supported language.
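A flag emoji is a pair of Unicode regional-indicator symbols (U+1F1E6 to U+1F1FF, standing for the letters A to Z), so the two-letter country code can be recovered from it arithmetically. The sketch below assumes the switch command is a flag embedded in a reply and maps a handful of country codes to languages. The table and function names are hypothetical, since the document lists neither the seventeen languages nor the exact command format.

```python
import re
from typing import Optional, Tuple

# Two regional-indicator symbols (U+1F1E6..U+1F1FF) form one flag emoji.
FLAG_RE = re.compile("[\U0001F1E6-\U0001F1FF]{2}")

# Hypothetical subset of a flag-to-language table.
FLAG_TO_LANGUAGE = {"FR": "French", "DE": "German", "GB": "English", "JP": "Japanese"}


def flag_to_country(flag: str) -> str:
    """Convert a flag emoji such as 🇫🇷 to its two-letter country code ('FR')."""
    return "".join(chr(ord(ch) - 0x1F1E6 + ord("A")) for ch in flag)


def extract_language_switch(text: str) -> Tuple[Optional[str], str]:
    """Return (new language or None, text with flag emoji removed)."""
    language = None
    for match in FLAG_RE.finditer(text):
        code = flag_to_country(match.group())
        if code in FLAG_TO_LANGUAGE:
            language = FLAG_TO_LANGUAGE[code]  # the last recognised flag wins
    cleaned = " ".join(FLAG_RE.sub("", text).split())
    return language, cleaned


print(extract_language_switch("\U0001F1EB\U0001F1F7 Bonjour !"))  # 🇫🇷 -> ('French', 'Bonjour !')
```

Stripping the flag before the text reaches speech synthesis would keep the robot from trying to read the emoji aloud.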

Robot Skills & Capabilities

Beyond speaking, RoboHearts AI can orchestrate multimodal robot actions:

- Animations and gestures – Wave, point, dance, and express emotions.

- Tablet display (Pepper) – Show images, text, floor plans, or conversation prompts.

- Interactive suggestions – Offer touch-ready responses on Pepper’s tablet to help conversations flow.

The AI decides when to use these skills based on context and the character instructions. For example, a museum-guide character can automatically show a floor plan on the tablet when giving directions.
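One way an LLM can choose skills is to return structured output that names actions alongside the words to speak, which the robot side then dispatches. The JSON shape, the skill names, and `run_turn` below are all hypothetical; the document does not describe how RoboHearts AI encodes skill choices. The sketch runs actions before returning the reply only for simplicity.

```python
import json
from typing import Callable, Dict


def run_turn(llm_output: str, skills: Dict[str, Callable[[str], None]]) -> str:
    """Dispatch requested skills, then return the text the robot should say."""
    reply = json.loads(llm_output)
    for action in reply.get("actions", []):
        handler = skills.get(action.get("skill"))
        if handler is not None:
            handler(action.get("arg", ""))
        # Unknown skills are skipped so one bad action cannot derail the conversation.
    return reply["say"]


# Hypothetical skill handlers; on a real robot these would drive animations or the tablet.
performed = []
skills = {
    "animate": lambda arg: performed.append(("animate", arg)),
    "show_image": lambda arg: performed.append(("show_image", arg)),
}

model_output = json.dumps({
    "say": "The Egyptian gallery is on the second floor.",
    "actions": [
        {"skill": "animate", "arg": "point"},
        {"skill": "show_image", "arg": "floor_plan.png"},
    ],
})
print(run_turn(model_output, skills))  # The Egyptian gallery is on the second floor.
print(performed)  # [('animate', 'point'), ('show_image', 'floor_plan.png')]
```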